API Reference

Complete API documentation for frontend integration


POST /api/scrape

Scrape a URL and convert it to Markdown, HTML, or PDF.
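As a minimal client-side sketch (not part of the API itself), a frontend can fill in the documented defaults from the Request fields below and reject unsupported enum values before sending anything. `withScrapeDefaults`, `SCRAPE_ENGINES`, and `SCRAPE_FORMATS` are hypothetical names chosen for illustration.

```javascript
// Hypothetical client-side helper (not part of the API): fills in the documented
// /api/scrape defaults and rejects unsupported enum values before sending.
const SCRAPE_ENGINES = ['auto', 'http', 'browser'];
const SCRAPE_FORMATS = ['raw.html', 'clean.html', 'markdown', 'pdf'];

function withScrapeDefaults(request) {
  new URL(request.url); // throws TypeError if url is missing or malformed (server: 400)

  const full = {
    engine: 'auto',
    format: 'markdown',
    downloadImages: false,
    includeMetadata: true,
    timeout: 30000, // milliseconds
    enableAI: false,
    ...request, // caller-supplied values override the defaults
  };
  if (!SCRAPE_ENGINES.includes(full.engine)) {
    throw new RangeError(`Unsupported engine: ${full.engine}`);
  }
  if (!SCRAPE_FORMATS.includes(full.format)) {
    throw new RangeError(`Unsupported format: ${full.format}`);
  }
  return full;
}
```

Sending the expanded object is optional, since the server applies the same defaults; the benefit is catching a typo such as engine: 'browsr' without a round trip.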

Request

```
{
  url: string                                              // Required
  engine?: 'auto' | 'http' | 'browser'                     // Default: 'auto'
  format?: 'raw.html' | 'clean.html' | 'markdown' | 'pdf'  // Default: 'markdown'
  downloadImages?: boolean   // Default: false (fetches images to local storage)
  includeMetadata?: boolean  // Default: true
  timeout?: number           // Default: 30000 (milliseconds)
  enableAI?: boolean         // Default: false (extracts main content and removes clutter)
  selectors?: { title?: string, content?: string }
}
```

Response

```
{
  success: true
  url: string
  format: 'raw.html' | 'clean.html' | 'markdown' | 'pdf'
  engine: 'http' | 'browser'
  content: string        // Scraped data (Markdown, HTML, or base64-encoded PDF)
  rawHtml?: string       // Included when format='markdown'
  title?: string
  metadata?: {
    convertedAt: string
    timing: { fetchDurationMs, cleanDurationMs, totalDurationMs }
    stats: { rawHtmlSizeBytes, markdownSizeBytes, compressionRatio }
    images?: {           // When downloadImages=true
      download: boolean
      totalBytes: number
      items: { originalUrl, localPath }[]
    }
  }
  discoveredLinks?: {    // Internal links discovered on the page
    url: string
    text?: string
  }[]
}
```

Example

curl -X POST http://localhost:3000/api/scrape \

-H "Content-Type: application/json" \

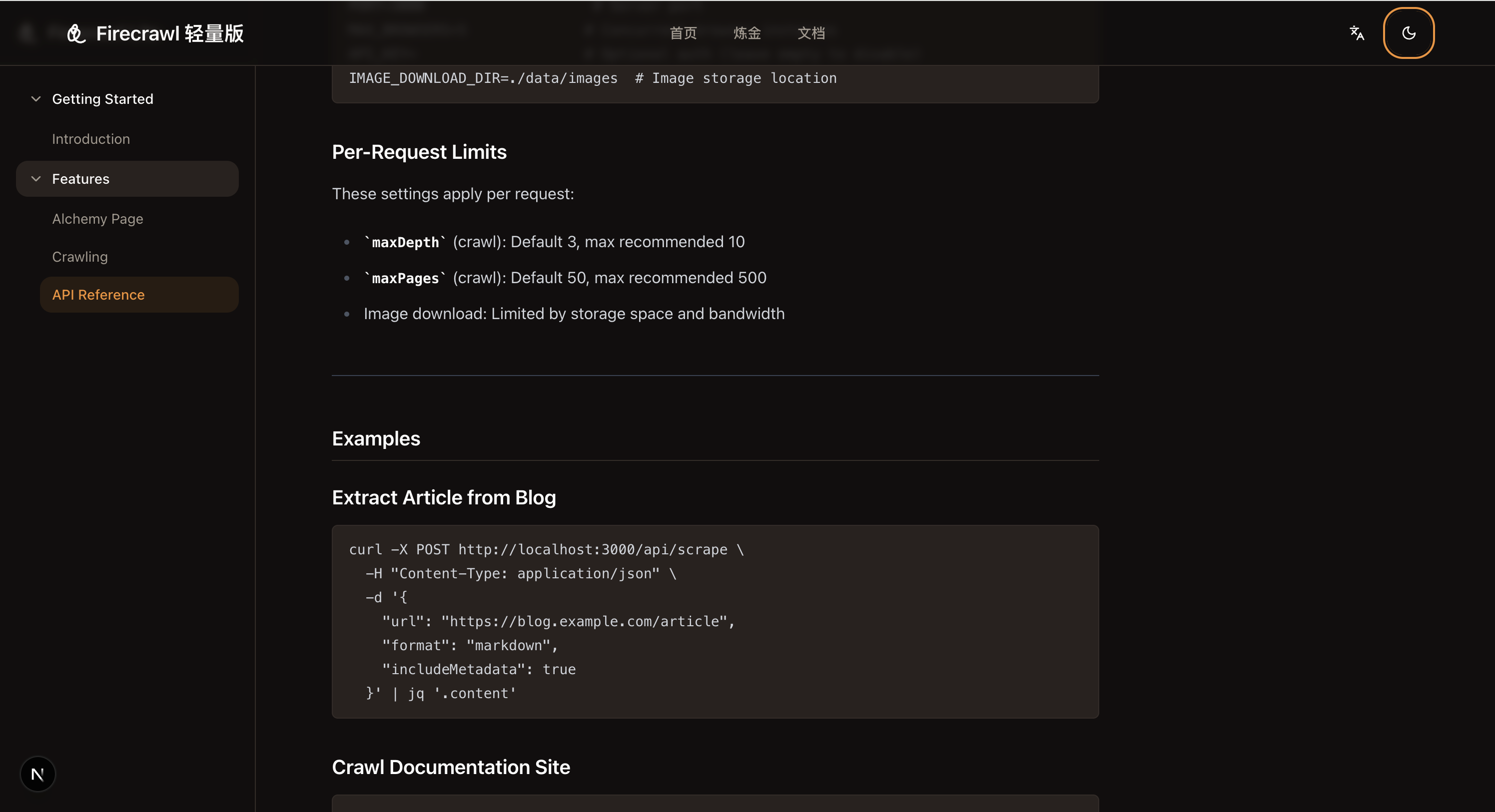

-d '{"url": "https://example.com", "format": "markdown"}'POST /api/crawl

Crawl a website recursively. Results are returned either as a single JSON response after the crawl finishes (default) or as a real-time Server-Sent Events (SSE) stream.
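The defaults and maximums listed in the Request fields below can also be enforced on the client. This sketch assumes you prefer to clamp out-of-range values rather than send them, since how the server treats values above the maximums is not specified here; `normalizeCrawlOptions` and `CRAWL_LIMITS` are hypothetical names.

```javascript
// Hypothetical helper: applies /api/crawl defaults and clamps to the documented maximums.
const CRAWL_LIMITS = {
  maxPages:    { default: 50, max: 500 },
  maxDepth:    { default: 3,  max: 10 },
  concurrency: { default: 2,  max: 10 },
};

function normalizeCrawlOptions(options) {
  const result = { ...options }; // keep url, pathPrefix, engine, etc. untouched
  for (const [key, { default: def, max }] of Object.entries(CRAWL_LIMITS)) {
    const value = options[key] ?? def; // fall back to the documented default
    result[key] = Math.min(max, value); // never exceed the documented maximum
  }
  return result;
}
```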

Request

```
{
  url: string            // Required - starting URL
  maxPages?: number      // Default: 50, max: 500
  maxDepth?: number      // Default: 3, max: 10
  concurrency?: number   // Default: 2, max: 10
  pathPrefix?: string    // Only crawl URLs whose path starts with this prefix (e.g., '/docs/')
  engine?: 'auto' | 'http' | 'browser'
  format?: 'markdown' | 'clean.html' | 'raw.html' | 'pdf'
  downloadImages?: boolean
}
```

JSON Mode (Default)

The request returns once the crawl has completed:

```bash
curl -X POST http://localhost:3000/api/crawl \
  -H "Content-Type: application/json" \
  -d '{"url": "https://example.com", "maxPages": 10}'
```

Response:

```
{
  success: true
  baseUrl: string
  totalPages: number
  pages: [{ url, title, content }]
  sitemap?: {
    crawledUrls: string[]              // URLs that were crawled
    discoveredUrls: DiscoveredLink[]   // Links discovered but not crawled
    stopReason: string                 // Why the crawl stopped (maxPages, maxDepth, or drained)
  }
}
```

SSE Mode (Real-time Progress)

Add an Accept: text/event-stream header to receive progress events in real time:

```bash
curl -X POST http://localhost:3000/api/crawl \
  -H "Content-Type: application/json" \
  -H "Accept: text/event-stream" \
  -d '{"url": "https://example.com", "maxPages": 50}'
```

Event Types:

```
// Detecting page type (auto mode)
data: {"type": "detecting", "completed": 0, "total": 50, "currentUrl": "https://..."}

// Engine upgrade (auto mode detected that a browser is needed)
data: {"type": "engine_upgrade", "completed": 1, "total": 50, "upgradeReason": "spa_detected"}

// Progress update
data: {"type": "progress", "completed": 5, "total": 50, "currentUrl": "https://...", "data": {...}}

// Final result
data: {"type": "complete", "data": { success, baseUrl, totalPages, pages, sitemap }}

// Error
data: {"type": "error", "error": "message"}
```

JavaScript SSE Client
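Each event type listed above can be mapped to a status line for a UI. A minimal sketch; `describeCrawlEvent` is a hypothetical helper, and the field names are taken from the event examples above.

```javascript
// Hypothetical helper: turns one parsed SSE event into a human-readable status line.
function describeCrawlEvent(event) {
  switch (event.type) {
    case 'detecting':
      return `Detecting page type: ${event.currentUrl}`;
    case 'engine_upgrade':
      return `Switching to browser engine (${event.upgradeReason})`;
    case 'progress':
      return `${event.completed}/${event.total}: ${event.currentUrl}`;
    case 'complete':
      return `Done: ${event.data.totalPages} pages crawled`;
    case 'error':
      return `Error: ${event.error}`;
    default:
      return `Unknown event type: ${event.type}`; // tolerate event types added later
  }
}
```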

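```javascript
async function crawlWithProgress(url, maxPages, onProgress) {
  const res = await fetch('/api/crawl', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Accept': 'text/event-stream'
    },
    body: JSON.stringify({ url, maxPages })
  });

  // Request-level failures (e.g. 400 for invalid parameters) may not be an SSE stream
  if (!res.ok) {
    throw new Error(`Crawl request failed: HTTP ${res.status}`);
  }

  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let buffer = '';
  let result = null;

  // Dispatch a single SSE line; throws when the server sends an error event
  const handleLine = (line) => {
    if (!line.startsWith('data: ')) return; // skip blank separator lines and comments
    const event = JSON.parse(line.substring(6));
    if (event.type === 'progress') {
      onProgress(event.completed, event.total, event.currentUrl);
    } else if (event.type === 'complete') {
      result = event.data;
    } else if (event.type === 'error') {
      throw new Error(event.error);
    }
  };

  try {
    while (true) {
      const { done, value } = await reader.read();
      if (done) break;
      buffer += decoder.decode(value, { stream: true });
      const lines = buffer.split('\n');
      buffer = lines.pop() || ''; // keep the incomplete last line for the next chunk
      // trimEnd removes trailing whitespace such as the \r of CRLF line endings
      for (const line of lines) handleLine(line.trimEnd());
    }
    buffer += decoder.decode(); // flush any bytes still held by the decoder
    if (buffer) handleLine(buffer.trimEnd());
  } catch (err) {
    reader.cancel().catch(() => {}); // stop the stream once we bail out
    throw err;
  }

  return result;
}

// Usage (inside an ES module or async function)
const result = await crawlWithProgress(
  'https://docs.example.com',
  100,
  (done, total, url) => console.log(`${done}/${total}: ${url}`)
);
```

POST /api/convert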

Convert an HTML file or Markdown text to another format.

Request

Supports two modes:

Mode 1: File Upload (HTML → Markdown)

- Content-Type: multipart/form-data
- Body: file (binary HTML file)
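From JavaScript, the same upload can be built with `FormData`. A minimal sketch for browsers or Node.js 18+ (where `FormData` and `Blob` are globals); `buildHtmlUpload` is a hypothetical helper, and because the `/api/convert` response shape is not documented here, reading the result is left to the caller.

```javascript
// Hypothetical helper: wraps an HTML string as the multipart "file" field used by Mode 1.
function buildHtmlUpload(html, filename = 'page.html') {
  const form = new FormData();
  form.append('file', new Blob([html], { type: 'text/html' }), filename);
  return form;
}

// Usage (inside an async function). Do not set Content-Type yourself:
// fetch adds multipart/form-data with the correct boundary automatically.
//   const res = await fetch('/api/convert', { method: 'POST', body: buildHtmlUpload(html) });
```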

```bash
curl -X POST http://localhost:3000/api/convert \
  -F "file=@page.html;type=text/html"
```

Mode 2: JSON Body (Markdown → PDF)

- Content-Type: application/json
- Body: JSON object with markdown and format fields

```bash
curl -X POST http://localhost:3000/api/convert \
  -H "Content-Type: application/json" \
  -d '{"markdown": "# Hello World", "format": "pdf"}'
```

POST /api/download

Create a ZIP archive containing Markdown content and local images.

Request

```
{
  markdown: string                                        // Required
  images?: { originalUrl: string, localPath: string }[]   // Optional
  title?: string                                          // Default: 'export'
}
```

Response

Binary ZIP file.
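A /api/scrape response made with format: 'markdown' and downloadImages: true already holds what this endpoint needs: the Markdown in `content` and the `{ originalUrl, localPath }` pairs in `metadata.images.items`. A sketch of that mapping; `toDownloadRequest` is a hypothetical helper name.

```javascript
// Hypothetical helper: maps a /api/scrape response to a /api/download request body.
function toDownloadRequest(scrapeResult) {
  if (scrapeResult.format !== 'markdown') {
    throw new Error('toDownloadRequest expects a scrape made with format "markdown"');
  }
  const body = {
    markdown: scrapeResult.content,
    // Present only when the scrape used downloadImages=true
    images: scrapeResult.metadata?.images?.items ?? [],
  };
  // Omit title when absent so the server default ('export') applies
  if (scrapeResult.title) body.title = scrapeResult.title;
  return body;
}
```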

Example

```bash
curl -X POST http://localhost:3000/api/download \
  -H "Content-Type: application/json" \
  --output export.zip \
  -d '{
    "markdown": "# Hello\n",
    "images": [{"originalUrl": "...", "localPath": "img/1.png"}],
    "title": "My Page"
  }'
```

POST /api/download/batch

Batch download multiple pages, generating a ZIP archive containing all Markdown files and a shared images directory.

Request Parameters

| Parameter | Type | Required | Description |
|---|---|---|---|
| pages | array | Yes | Array of page data |
| pages[].url | string | Yes | Original page URL |
| pages[].markdown | string | Yes | Extracted Markdown content |
| pages[].title | string | No | Page title (used for filename generation) |
| pages[].images | array | No | Image mapping array |
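Crawl results map onto these parameters directly: each entry of a /api/crawl response's `pages` array (`{ url, title, content }`) becomes one `pages[]` item, with `content` sent as `markdown`. A sketch, assuming the crawl was run with format: 'markdown'; `toBatchRequest` is a hypothetical name.

```javascript
// Hypothetical helper: converts /api/crawl pages into a /api/download/batch request body.
function toBatchRequest(crawlResult) {
  return {
    pages: crawlResult.pages.map(({ url, title, content }) => {
      const page = { url, markdown: content };
      if (title) page.title = title; // optional; used for the generated filename
      return page;
    }),
  };
}
```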

Request Example

```bash
curl -X POST http://localhost:3000/api/download/batch \
  -H "Content-Type: application/json" \
  -d '{
    "pages": [
      {
        "url": "https://example.com/page1",
        "markdown": "# Page 1\n\nContent here...",
        "title": "Page 1"
      },
      {
        "url": "https://example.com/page2",
        "markdown": "# Page 2\n\nMore content...",
        "title": "Page 2"
      }
    ]
  }' --output batch.zip
```

Response

- Content-Type: application/zip
- Filename: generated from the first page's domain, e.g., example.com-batch.zip
- ZIP structure:

```
example.com-batch.zip
├── page-1.md
├── page-2.md
└── images/
    ├── image1.png
    └── image2.jpg
```
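To save the archive under the right name in a browser, a client needs the filename. This sketch assumes the server announces it in a `Content-Disposition` header (the text above does not say how it is delivered) and otherwise approximates the documented `<domain>-batch.zip` pattern from the first page's hostname; the server may treat ports or `www.` prefixes differently. `batchZipName` is a hypothetical helper.

```javascript
// Hypothetical helper: prefer a server-supplied filename, else approximate
// the documented "<domain>-batch.zip" pattern from the first page's URL.
// Note: RFC 5987 "filename*=" values are not decoded here.
function batchZipName(res, pages) {
  const disposition = res.headers.get('Content-Disposition') ?? '';
  const match = /filename="?([^";]+)"?/i.exec(disposition);
  if (match) return match[1];
  return `${new URL(pages[0].url).hostname}-batch.zip`;
}
```

In a browser, the result pairs naturally with `await res.blob()` and a temporary object URL for the download link.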

GET /api/images/*

Serve images downloaded during scrape/crawl when downloadImages=true.

Usage

Images are stored locally and served through this endpoint: append the localPath returned in metadata.images.items to /api/images/.
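A sketch of building that URL from a `localPath`. `imageUrlFor` is a hypothetical helper; encoding each path segment is a defensive choice in case a stored filename contains spaces or other reserved characters.

```javascript
// Hypothetical helper: turns a localPath from metadata.images.items into a servable URL.
function imageUrlFor(localPath, baseUrl = '') {
  const encoded = localPath
    .replace(/^\/+/, '')      // avoid a double slash after /api/images/
    .split('/')
    .map(encodeURIComponent)  // encode each segment, keep the "/" separators
    .join('/');
  return `${baseUrl}/api/images/${encoded}`;
}
```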

Example

```bash
curl http://localhost:3000/api/images/2023/10/page-id/image.png --output image.png
```

GET /api/health

Health check endpoint.
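When the service is started alongside other tooling (CI jobs, containers), a client can poll this endpoint until it answers. A minimal sketch; `waitForHealthy`, its default attempt count and delay, and the injectable `fetchImpl` parameter are assumptions made for illustration and testability.

```javascript
// Hypothetical readiness poll: resolves with the health payload once /api/health reports ok.
async function waitForHealthy(baseUrl, { attempts = 10, delayMs = 500, fetchImpl = fetch } = {}) {
  for (let i = 1; i <= attempts; i++) {
    try {
      const res = await fetchImpl(`${baseUrl}/api/health`);
      if (res.ok) {
        const body = await res.json();
        if (body.status === 'ok') return body;
      }
    } catch {
      // Network error or non-JSON reply (e.g. server still starting); try again
    }
    if (i < attempts) await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  throw new Error(`Service at ${baseUrl} not healthy after ${attempts} attempts`);
}
```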

```bash
curl http://localhost:3000/api/health
# {"status":"ok","version":"..."}
```

Engine Selection

| Engine | Typical Latency | Use Case |
|---|---|---|
| auto | ~1.2s avg | Most cases (default) |
| http | ~170ms | Static HTML sites |
| browser | ~5s | JavaScript SPAs |

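Auto mode tries the HTTP engine first and upgrades to the browser engine when the expected content is missing from the HTTP response (for example, on a client-rendered SPA).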
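The exact detection logic behind auto mode is not documented here. Purely as an illustration of the idea, a heuristic like the following flags HTML that is an almost-empty app shell, which is typical of client-rendered SPAs. `looksClientRendered`, the mount-point IDs, and the 200-character threshold are all assumptions, not the server's implementation.

```javascript
// Illustrative heuristic (NOT the server's actual auto-mode logic):
// flags HTML that looks like an empty SPA shell needing a real browser.
function looksClientRendered(html, minTextLength = 200) {
  // Strip scripts, styles, and tags to approximate the visible text
  const text = html
    .replace(/<script[\s\S]*?<\/script>/gi, '')
    .replace(/<style[\s\S]*?<\/style>/gi, '')
    .replace(/<[^>]+>/g, ' ')
    .replace(/\s+/g, ' ')
    .trim();
  // Common SPA mount points (assumed examples)
  const hasMountPoint = /<div[^>]+id=["'](root|app|__next)["']/i.test(html);
  return text.length < minTextLength && (hasMountPoint || /<script\b/i.test(html));
}
```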

Output Formats

| Format | Relative Size | Best For |
|---|---|---|
| markdown | Smallest | LLM processing, reading |
| clean.html | Medium | Web display |
| raw.html | Largest | Debugging |
| pdf | Variable | Offline reading, archiving |
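When saving a scrape result to disk or offering it as a download, the format determines the extension, MIME type, and decoding (a pdf result's `content` is base64, per the response schema). A sketch; the extension and MIME mapping is a conventional assumption rather than something the API specifies, and `toFilePayload` is a hypothetical name.

```javascript
// Conventional extension/MIME mapping per output format (assumed, not from the API)
const FORMAT_FILES = {
  'markdown':   { ext: 'md',   mime: 'text/markdown' },
  'clean.html': { ext: 'html', mime: 'text/html' },
  'raw.html':   { ext: 'html', mime: 'text/html' },
  'pdf':        { ext: 'pdf',  mime: 'application/pdf' },
};

// Hypothetical helper: converts a scrape result's content into raw bytes plus file info.
function toFilePayload(result, baseName = 'page') {
  const info = FORMAT_FILES[result.format];
  if (!info) throw new Error(`Unknown format: ${result.format}`);
  const bytes = result.format === 'pdf'
    ? Uint8Array.from(atob(result.content), (ch) => ch.charCodeAt(0)) // base64 -> bytes
    : new TextEncoder().encode(result.content);                       // text -> UTF-8 bytes
  return { filename: `${baseName}.${info.ext}`, mimeType: info.mime, bytes };
}
```

In a browser, `new Blob([payload.bytes], { type: payload.mimeType })` turns the payload into a downloadable file.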

Metadata Frontmatter

When format='markdown' and includeMetadata=true, the Markdown begins with a YAML frontmatter block:

```markdown
---
url: https://example.com
title: Page Title
converted_at: 2025-11-17T12:34:56.789Z
engine: browser
timing:
  fetch_duration_ms: 250
  total_duration_ms: 300
stats:
  raw_html_size_bytes: 125000
  markdown_size_bytes: 12000
  compression_ratio: 0.096
---

# Actual Markdown Content...
```

Error Handling

```
// Error response
{
  success: false
  url: string
  error: string
}

// Common errors:
// - 400: Invalid URL or parameters
// - 403: Site blocked access
// - 404: Page not found
// - 408: Timeout
// - 500: Internal error
```

Retry Strategy
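The retry loop below calls a `scrape(url)` function that this reference does not define. A hypothetical version is sketched here: it posts to /api/scrape and, on failure, throws an `Error` that carries the HTTP status as a `status` property and also as the first number in its message, so status-based retry logic has something reliable to inspect. `apiError` and `scrape` are illustrative names, not part of the API.

```javascript
// Hypothetical helper: builds an Error carrying the HTTP status.
// The message contains the status as its first number, e.g. "HTTP 503: ...".
function apiError(status, body) {
  const err = new Error(`HTTP ${status}: ${body?.error ?? 'request failed'}`);
  err.status = status;
  err.url = body?.url;
  return err;
}

// Hypothetical scrape(url) helper for /api/scrape (name and signature are assumptions)
async function scrape(url, options = {}) {
  const res = await fetch('/api/scrape', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ url, ...options }),
  });
  // Error responses use the { success: false, url, error } shape
  const body = await res.json().catch(() => null);
  if (!res.ok || !body?.success) throw apiError(res.status, body);
  return body;
}
```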

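```javascript
// Assumes a scrape(url) helper that throws an Error carrying the HTTP status
// as e.status (undefined for network failures).
async function scrapeWithRetry(url, retries = 3) {
  for (let i = 0; i < retries; i++) {
    try {
      return await scrape(url);
    } catch (e) {
      const status = e.status; // HTTP status, or undefined for network errors
      if (status >= 400 && status < 500) throw e; // Don't retry 4xx
      if (i === retries - 1) throw e; // Out of attempts: surface the last error
      await new Promise(r => setTimeout(r, 1000 << i)); // Exponential backoff: 1s, 2s, 4s, ...
    }
  }
}
```

Authentication (Optional)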

If the backend has API_KEY set, send the key as a Bearer token:

```javascript
fetch('/api/scrape', {
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
    'Authorization': 'Bearer YOUR_API_KEY'
  },
  body: JSON.stringify({ url })
});
```

Rate Limiting

- Crawl: 1 request/second (polite crawling)

- Scrape: No limit by default

- Configure in deployment if needed
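Because scrape requests are not limited by default, a client that issues many of them in a loop may want to pace itself. A minimal sketch of a client-side throttle that starts calls at least `minIntervalMs` apart, even when they are queued concurrently; `createThrottle` is a hypothetical helper, and any interval you pick is your own choice, not a server requirement.

```javascript
// Hypothetical client-side throttle: each call starts at least minIntervalMs
// after the previous one, even when many calls are made at once.
function createThrottle(minIntervalMs) {
  let nextAllowed = 0; // earliest timestamp (ms) at which the next call may start

  return async function throttled(task) {
    const now = Date.now();
    const startAt = Math.max(now, nextAllowed);
    nextAllowed = startAt + minIntervalMs; // reserve this slot before awaiting
    if (startAt > now) {
      await new Promise((resolve) => setTimeout(resolve, startAt - now));
    }
    return task();
  };
}
```

For example, wrapping each fetch to /api/scrape as `throttle(() => fetch(...))` with `createThrottle(1000)` keeps the client to roughly one request per second.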